BERT HuggingFace Model Deployment using Kubernetes [ Github Repo] - 03/07/2024

Transformer Model deployment using Kubernetes

Github Repo : https://github.com/vaibhawkhemka/ML-Umbrella/tree/main/MLops/Model_Deployment/Bert_Kubernetes_deployment

Motivation:

Model development is useless if you don’t deploy it to production which comes with lot of issues of scalability and portability.

I have deployed a basic BERT model from the huggingface transformer on Kubernetes with the help of docker which will give a feel how to deploy and manage pods on production.

Model Serving and deployment:

ML Pipeline:

Workflow:

Model server (using FastAPI, uvicorn) for BERT uncased model →

Containerize model and inference scripts to create docker image →

Kubernetes deployment for these model server (for scalability) → Testing

Components:

Model server

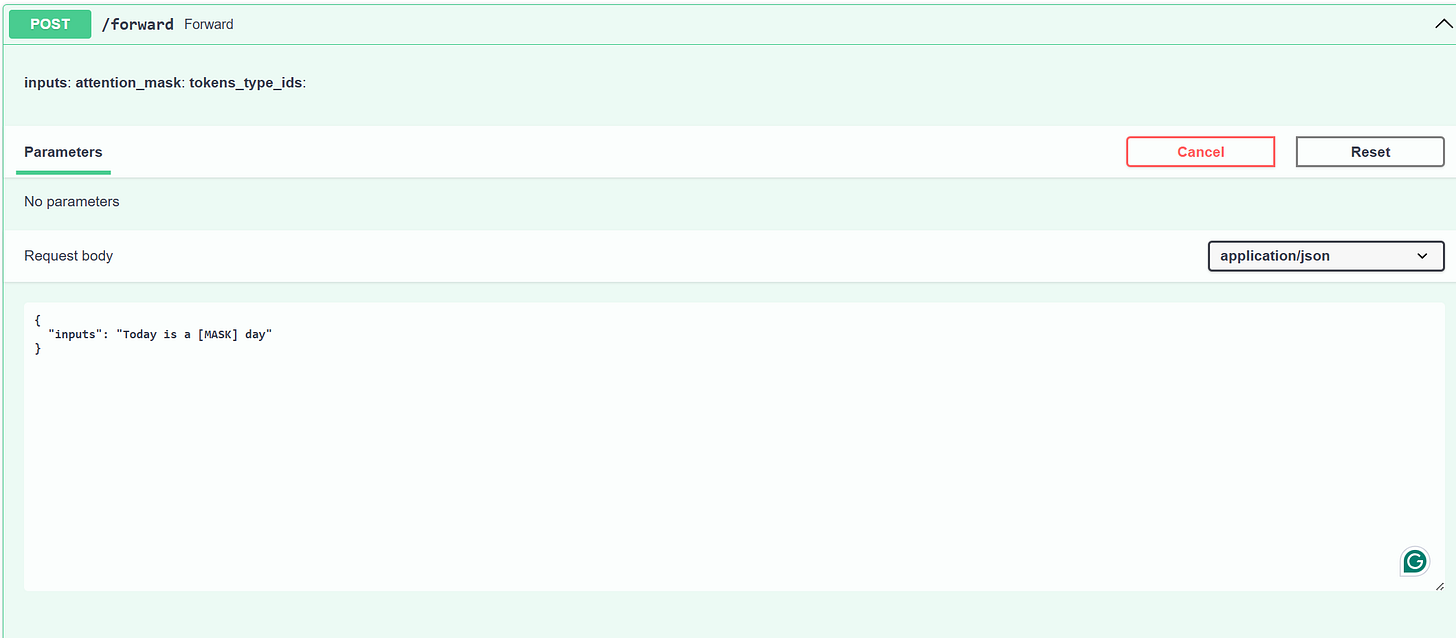

Used BERT uncased model from hugging face for prediction of next word [MASK]. Inference is done using transformer-cli which uses fastapi and uvicorn to serve the model endpoints

Server streaming:

Testing: (fastapi docs)

http://localhost:8888/docs/

{ "output": [ { "score": 0.21721847355365753, "token": 2204, "token_str": "good", "sequence": "today is a good day" }, { "score": 0.16623663902282715, "token": 2047, "token_str": "new", "sequence": "today is a new day" }, { "score": 0.07342924177646637, "token": 2307, "token_str": "great", "sequence": "today is a great day" }, { "score": 0.0656224861741066, "token": 2502, "token_str": "big", "sequence": "today is a big day" }, { "score": 0.03518620505928993, "token": 3376, "token_str": "beautiful", "sequence": "today is a beautiful day" } ]

Containerization

Created a docker image from huggingface GPU base image and pushed to dockerhub after testing.

Testing on docker container:

You can directly pull the image vaibhaw06/bert-kubernetes:latest

K8s deployment

Used minikube and kubectl commands to create a single pod container for serving the model by configuring deployment and service config

deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: bert-deployment

labels:

app: bertapp

spec:

replicas: 1

selector:

matchLabels:

app: bertapp

template:

metadata:

labels:

app: bertapp

spec:

containers:

- name: bertapp

image: vaibhaw06/bert-kubernetes

ports:

- containerPort: 8080

---

apiVersion: v1

kind: Service

metadata:

name: bert-service

spec:

type: NodePort

selector:

app: bertapp

ports:

- protocol: TCP

port: 8080

targetPort: 8080

nodePort: 30100

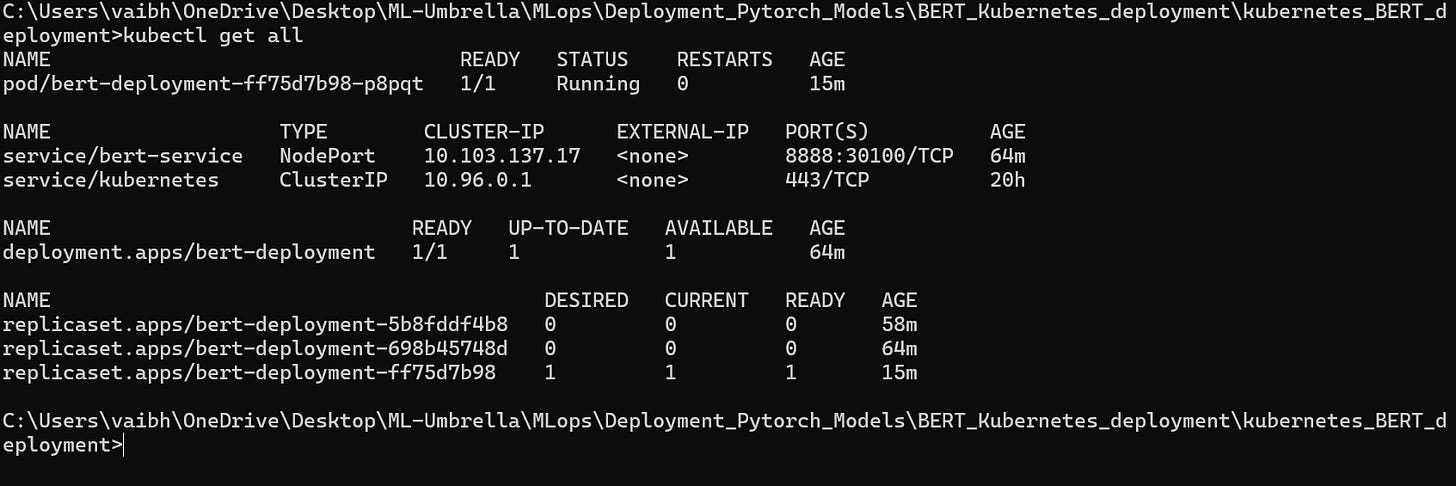

Setting up minikube and running pods using kubectl and deployment.yaml

minikube start

kubectl apply -f deployment.yaml Final Testing:

kubectl get allIt took around 15 mins to pull and create container pods.

kubectl image listkubectl get svcminikube service bert-serviceAfter running the last command minikube service bert-service, you can verify the result deployment on web endpoint.

Find the Github Link: https://github.com/vaibhawkhemka/ML-Umbrella/tree/main/MLops/Model_Deployment/Bert_Kubernetes_deployment

If you have any questions, ping me on my LinkedIn: https://www.linkedin.com/in/vaibhaw-khemka-a92156176/

Follow ML Umbrella for more such detailed actionable projects.

Future Extension:

Scaling with pod replicas and load balancer -

Self healing